Netflix's The Social Dilemma is a dramatic wake-up call to the inherent dangers of social media.

Although the screenshot above screams the sky is falling and the film has other sensationalist moments, the overall tone is more Afterschool Special than Chicken Little. (As the credits roll, the experts even offer practical tips for dealing with social media in your personal life.) But the film’s most important contribution is providing viewers with a powerful mental model for how social media platforms operate vis-à-vis their users. If you watch the film, you won’t be able to look at your social media feeds in the same way.

As a wake-up call, it is highly effective. It brings to life social media’s potential for abuse and addiction, and its power to shape our perceptions of reality. It explains the underlying attention-based business models and algorithm-powered technologies of social media platforms in an accessible and powerful way. Moreover, it shows how their combination can take advantage of our psychology and accelerate extremism.

Even if you think the actual harm caused by social media to date is overblown, the film suggests you shouldn’t take much comfort for the future: these problems are likely to grow as the AI-powered algorithms continue to improve rapidly while human users and society adapt much more slowly.

Note: spoilers below

A narrative solution?

As a wake-up call, it works. The message is clear: Something must be done! But as “Dilemma” suggests, the film ultimately raises more questions than it provides answers. However, in a notable way, the film itself is part of the solution.

If social media’s superpower is the ability to personalize and micro-target using seemingly infinite amounts of data, film’s superpower is life-like, multi-sensory storytelling. The Social Dilemma uses that superpower to bring Facebook’s[1] algorithms to life.[2] In between standard documentary fare—interviewer-less shots of former employees and executives, academics and cultural observers—the film interweaves the story of a fictional American family using and succumbing to the dangers of social media.

The toy narrative is bookended with two dramatic examples:

Towards the start, a family dinner ends in chaos. Mom tries to get everyone’s full participation by storing all family members’ cell phones in a plastic time-lock container for one hour. But within minutes, the dinner ends with a literal bang. One of the teenage daughters—embodying full-blown social-media addiction—smashes the container to pieces with a hammer to get to her phone.[3]

Later, near the end of the film, the two other siblings end up in handcuffs face down on the ground. The teenage son had attended a demonstration after being radicalized to the fictional “Extreme Center” by propaganda and conspiracy theories promoted to him in his social media feeds. His sister is trying to take him home but is also swept up.

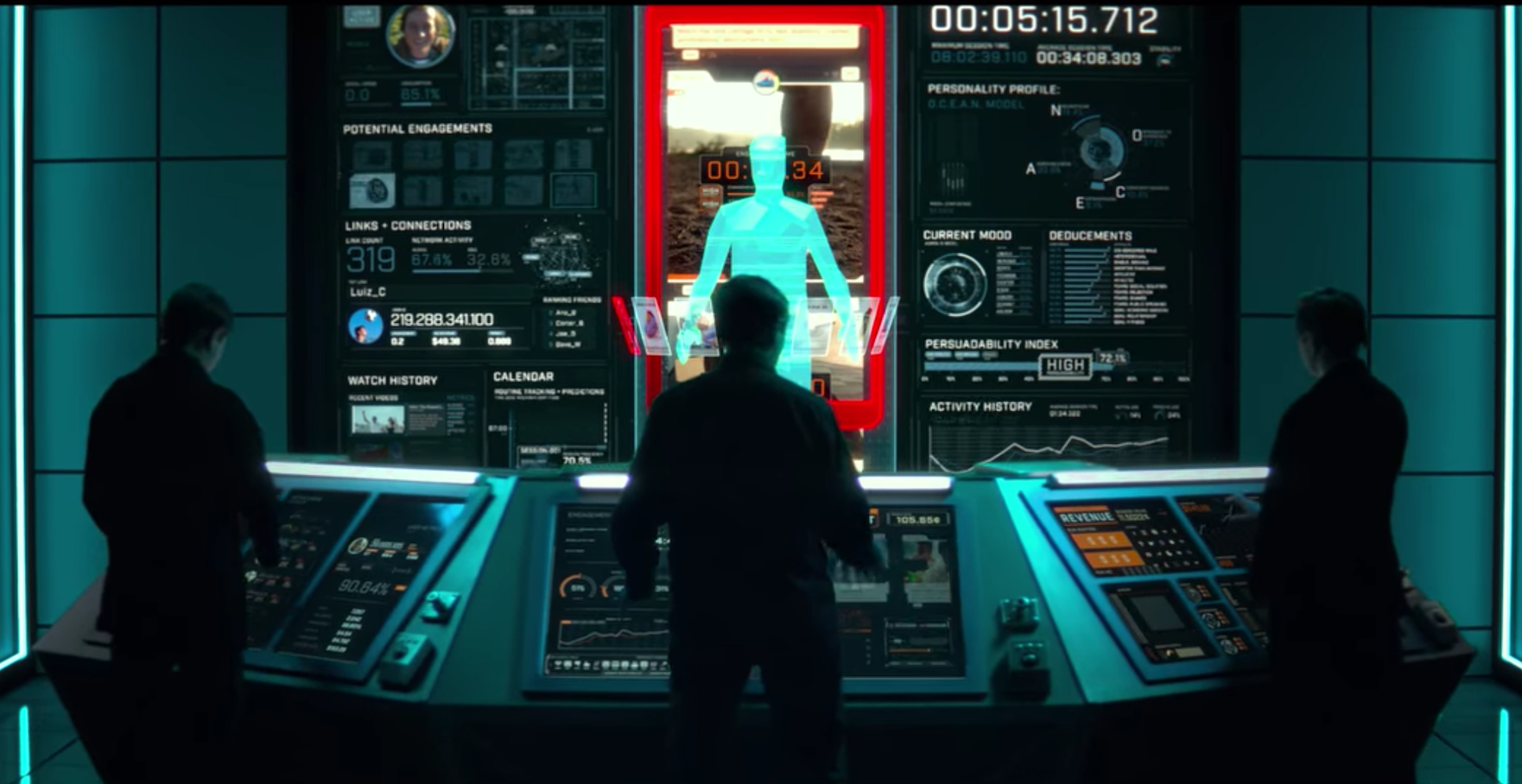

The fictional narrative and its characters are often simplistic and shallow. But that is wholly appropriate for the mini-story’s main character: the Facebook algorithm anthropomorphized. This representation is the film’s biggest contribution.

Do you realize what you’re up against?!?

The algorithm is literally brought to life in the form of three lookalike guys operating a vast Minority Report-style control room that is entirely dedicated to the Facebook feed of a single teenage boy, the mini-story’s main character. As he is using the app, the control room operators analyze all the incoming data: how long he paused on that picture, which links he clicked, which friends are physically near him, how long he has been using the app this session and how that compares to an average session. They use all that data to decide what piece of content to show next and when to show an ad, all the while trying to make sure he keeps using the app as long as possible.

When he is not using the app, the operators work to get him back on it, sending him the notifications most likely to get his attention when seen and most likely to prompt further action on the app if he checks them. The control room’s digital “voodoo doll-like” model drives home the ability of the algorithm’s stimuli to prompt robot-like responses.

Watching the film may be the first time some viewers consider how social media apps work under the hood, but the film is not revealing any secrets in the details. Nevertheless, the film’s synthesis of known components into a metaphorical whole seems revelatory even for someone familiar with how the apps work.

The three men are a nod to the social media platforms’ three goals: engagement (time spent on the app), growth (new users) and monetization (displaying ads). The algorithms care about these three things. The algorithms don’t care about anything else.

The film’s commentary emphasizes one axis of power: the vast computing power and brainpower dedicated to achieving those goals. But the story shows there is an even more important axis of power: the unyielding focus on those objectives. From that focus necessarily follows an indifference to all other objectives.

As a result, the algorithm does not care about the actual contents of the “content” it shows you. It only cares that you keep scrolling, keep liking, keep sharing. If family pictures and cat videos do the job, the algorithm will show those. But if clickbait or misleading headlines or conspiracy theories do a better job, goodbye cat videos.
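
To make that indifference concrete, here is a toy sketch in Python. It is purely illustrative: the item names, the engagement numbers and the `pick_next` function are all made up for this example and are not taken from the film or from any real platform's code. The only point is what the ranking step looks at, and what it never looks at.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    kind: str  # e.g. "cat video" or "conspiracy theory"; the ranker never reads this
    predicted_engagement: float  # hypothetical score, e.g. expected seconds of attention


def pick_next(candidates: list[Item]) -> Item:
    """Return the candidate with the highest predicted engagement.

    Nothing here inspects `kind` or `title`: the objective is engagement only.
    """
    return max(candidates, key=lambda item: item.predicted_engagement)


# Made-up feed with made-up scores.
feed = [
    Item("Grandma's birthday", "family photo", 12.0),
    Item("Cat falls off couch", "cat video", 20.0),
    Item("What THEY don't want you to know", "conspiracy theory", 45.0),
]

print(pick_next(feed).kind)
```

With these invented numbers the conspiracy item wins simply because its score is highest. Lower that score below 20.0 and the cat video is picked instead; the code itself has no opinion either way.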

“You are the product” is often invoked in the context of privacy and personal data. But The Social Dilemma reframes the metaphor and suggests we should worry more about how these platforms demand our attention and how they change our behavior than we should worry about the data they are extracting.

If you could design a sophisticated control room to filter the information you received, I doubt you would optimize it for engagement, i.e., time spent using it. It would be like directing an elementary school teacher to focus only on making sure the students have maximum fun every day. The Social Dilemma demonstrates those sophisticated control rooms do exist and maximum engagement is their goal. And we continue to rely on control rooms like this for more and more of our information.

Facebook apparently felt unfairly attacked and maligned by the film and released a statement on Friday with a list of contentions. The statement correctly points out that Facebook has taken steps to limit the worst effects suggested by the film (and by the simple depiction above), including down-ranking (but not removing) misinformation and limiting internal incentives to maximize users’ screen time. But the statement mostly misses the point. Despite its implicit hubris, Facebook cannot solve all the potential downsides of social media through its own engineering efforts and Facebook does not represent the entire social media universe even today. Facebook aims to be benign but future platforms may not.

Algorithms tailored to individual behavior increasingly will be used in technology beyond social media. Much good can come from that. But evil can too. Some skepticism is healthy. The depiction in The Social Dilemma helps.

Have you watched The Social Dilemma? Add your thoughts below.

Thanks to Job Chanasit, JD Co-Reyes and Mark Terrelonge for discussion about the film. Thanks to Noah Maier from the Compound Writing community for feedback on an earlier draft of this post.

[1] In the fictional mini-story within the film, the social media platform is unnamed and not specifically identified as Facebook. But the product depicted is more similar to Facebook than to any other major social network.

[2] It also leverages the unique strengths of film in other ways. It uses a set of dramatic interstitials reminiscent of Requiem for a Dream (showing short clips of drug use and hyper-magnified physiological changes) to associate social media with hard drug usage.

[3] She is seen after the fact holding a hammer and wearing safety glasses, which I found hilarious in its ridiculousness. But it also fits the Afterschool Special vibe of the mini-story.